Lock-Free Rust: How to Build a Rollercoaster While It’s on Fire

“Every stitch in that flag was a commitment to thread safety without locks.

std::atomicwas the needle.”— Betsy Ross, Federalist Papers, Draft 29 (suppressed), 1782

Buckle your seatbelts, grab a helmet, and say goodbye to your loved ones because over the next few minutes, I am going to teach you more about lock-free data structures in Rust than any human being should reasonably know without a psychiatric evaluation.

So sit down, shut up, and pretend you understand memory ordering, because thread safety is just a social construct and Ordering::Relaxed is just denial with extra steps.

TL;DR

We’re building LockFreeArray<T, N>, a fixed-size, lock-free array for storing heap-allocated values. It uses atomics and a freelist to insert and take values across threads without locks. You’ll learn:

- How

AtomicPtr,AtomicUsize, andcompare_exchangework. - Why memory ordering matters (and how to screw it up).

- Where this kind of thing is useful (task slots, freelists, fixed-resource pools).

- And why it's generally a terrible idea unless you're very desperate or very clever.

If you enjoy the read and want to see more content like this please check out our product yeet or our sandbox. Thank you.

Lock-Free: The Decathlon of Danger

Lock-free programming exists for the same reason people free solo climb cliffs without ropes: it’s fast, it’s elegant, and it absolutely will kill you if you do it wrong. Here at yeet, we have become masters in this. Specifically when it comes to building high-performance priority queues for real-time streaming of high volume events from BPF ring buffers.

With Mutex<T> and RwLock<T>, you get warm, fuzzy guarantees — mutual exclusion, fairness, and the comfort of compile-time safety at the expense of speed. On the other hand, with lock-free, you get speed — but it's entirely on you to keep it safe. No waiting, no blocking, no help.

It’s the difference between riding a rollercoaster and building one mid-air while it’s on fire — and you’re also the passenger.

Congratulations — if you’re still reading, you’ve officially decided to ignore your therapist, ghost the borrow checker, and raw-dog concurrency.

Meet The Atomics

You don’t need all of them — just the dangerous few:

AtomicPtr<T>

Think raw pointers, but in a shared haunted house. You can pass ownership between threads, but if you screw up, the ghost of undefined behavior will visit you at runtime.

AtomicUsize

Used for indexing and freelist linkage. It’s a plain old number, except it’s watched 24 / 7 by the CPU’s race condition alarm.

Ordering::{Acquire, Release, AcqRel, Relaxed}

These decide when other threads can see your changes. Use the wrong one and your writes arrive out of order, like sending a cake before the oven’s even preheated.

That’s it. Three tools. Infinite ways to wake up in a hospital wrapped in unsafe.

Lock-Free Arrays: Index at Your Own Risk

This isn’t your friendly neighborhood Vec<T>. This is Vec<Violence>.

No resizing. No bounds checks. No cozy locks to hold your hand when the threads start racing. Just raw pointers, atomics, and the kind of confidence that comes from skimming half the docs, pounding gas station coffee, and whispering “how hard could it be?”

We’ll call it LockFreeArray<T, N>

Think of it as a fixed-size concurrent slot buffer — useful for task pools, on-demand workers, or resource slots that can’t afford a lock.

It has three methods:

new()

Initializes the array and sets up a freelist of available slots.

try_insert(value: T) -> Result<usize, T>

Tries to place a value in an empty slot. On success, returns the index. On failure, returns your value back, gently but firmly.

take(index: usize) -> Option<T>

Removes and returns the value T at the given index. If it’s already empty, returns None.

use std::array;

use std::ptr;

use std::sync::atomic::{AtomicPtr, AtomicUsize, Ordering};

/// Index tagging to solve the ABA problem.

fn pack(index: usize, tag: usize) -> usize {

(tag << 32) | index

}

/// Index tagging to solve the ABA problem.

fn unpack(value: usize) -> (usize, usize) {

let index = value & 0xFFFF_FFFF;

let tag = value >> 32;

(index, tag)

}

pub struct LockFreeArray<T: Send + Sync, const N: usize> {

slots: [AtomicPtr<T>; N],

freelist_head: AtomicUsize, // stores (tag << 32) | index

next: [AtomicUsize; N],

}

impl<T: Send + Sync, const N: usize> LockFreeArray<T, N> {

pub fn new() -> Self {

let slots = array::from_fn(|_| AtomicPtr::new(ptr::null_mut()));

let next = array::from_fn(|i| AtomicUsize::new(if i + 1 < N { i + 1 } else { N }));

Self {

slots,

freelist_head: AtomicUsize::new(pack(0, 0)),

next,

}

}

pub fn try_insert(&self, value: T) -> Result<usize, T> {

let boxed = Box::into_raw(Box::new(value));

loop {

let old = self.freelist_head.load(Ordering::Acquire);

let (head, tag) = unpack(old);

if head == N {

let value = unsafe { *Box::from_raw(boxed) };

return Err(value);

}

let next_index = self.next[head].load(Ordering::Relaxed);

let new = pack(next_index, tag.wrapping_add(1));

if self

.freelist_head

.compare_exchange(old, new, Ordering::AcqRel, Ordering::Relaxed)

.is_ok()

{

self.slots[head].store(boxed, Ordering::Release);

return Ok(head);

}

// Retry if compare_exchange failed

}

}

pub fn take(&self, index: usize) -> Option<T> {

if index >= N {

return None;

}

let ptr = self.slots[index].swap(ptr::null_mut(), Ordering::AcqRel);

if ptr.is_null() {

return None;

}

let value = unsafe { *Box::from_raw(ptr) };

loop {

let old = self.freelist_head.load(Ordering::Acquire);

let (head, tag) = unpack(old);

self.next[index].store(head, Ordering::Relaxed);

let new = pack(index, tag.wrapping_add(1));

if self

.freelist_head

.compare_exchange(old, new, Ordering::AcqRel, Ordering::Relaxed)

.is_ok()

{

break;

}

}

Some(value)

}

}

Memory Safety Is a Suggestion

Congratulations — you now own a LockFreeArray<T, N> that can insert and remove values across threads without ever acquiring a lock. You’re living the dream.

At this point, you’re probably wondering:

“Why would any rational person build something this unstable on purpose?”

Two words: cash money.

Lock-free data structures aren’t just a flex — they’re fast. Like “your CPU cache is crying but your latency is legendary” fast.

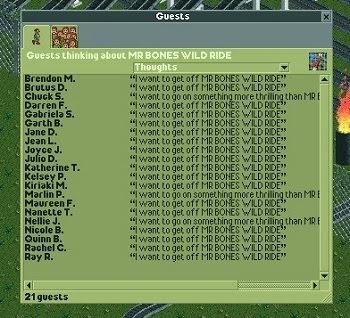

To illustrate this here is some performance benchmarks obtained using the code in the appendix:

Running 10 trials of producer-consumer workloads

Producers: 6, Consumers: 2, Array Size: 100

------------------------------------------------------

Trial LockFreeArray (ms) Mutex<Vec<Option<T>>> (ms) Diff (%)

1 297.616 2347.711 87.32%

2 298.942 1872.945 84.04%

3 273.726 1861.038 85.29%

4 334.799 1494.331 77.60%

5 324.014 2206.664 85.32%

6 307.311 2199.226 86.03%

7 316.705 1297.407 75.59%

8 299.890 1973.732 84.81%

9 323.294 1633.112 80.20%

10 375.024 2627.307 85.73%

Mean 315.132 1951.347 83.19%

Std Dev 25.882 386.424 3.77%

🏁 Winner: LockFreeArray (faster by 83.19% on average)

This is the exact kind of speed we need to achieve in our product

The Freelist: A Poor Man’s Allocator

At the heart of this design is a freelist — an internal linked list of available slots. Rather than scanning for empty indices every time, we pop indices off the freelist and push them back when we take(). It’s like if Vec<T> had a back alley cousin that said:

“No resizing. No bounds checks. Just vibes.”

Each slot in slots: [AtomicPtr<T>; N] holds a pointer to a heap-allocated T or NULL

If the value is NULL, the slot is free. If it’s non-null (like *T), the slot is occupied.

slots: [AtomicPtr<T>; N]

[0] [1] [2] [3] [4]

+--------+--------+--------+--------+--------+

| ptr | ptr | NULL | NULL | NULL |

+--------+--------+--------+--------+--------+

| |

| |

| |

v V

["apple"] ["banana"]

The next: [AtomicUsize; N] array builds the linked list. Each index points to the next.

next: [AtomicUsize; N] (freelist linkage between slots)

[0] [1] [2] [3] [4]

+-------+-------+-------+-------+-------+

| 1 | 2 | 3 | 4 | 5 |

+-------+-------+-------+-------+-------+

^

|

freelist_head

freelist_head: AtomicUsize is the head of that list, so new inserts take slots[1] first.

freelist walk:

[1] -> [2] -> [3] -> [4] -> END

Has Science Gone Too Far?

At this point, if you’re thinking “this seems like a bad idea,” congratulations — your survival instincts are still functional.

But here you are.

You’ve written a memory allocator using raw pointers, bypassed every lock in the standard library, and now your freelist is a tiny, unsupervised thread rave where ownership rules are just polite suggestions.

You are no longer writing Rust. You are summoning Rust.

And the borrow checker? He left hours ago. He saw the AtomicPtr<T> , dropped his clipboard and booked it.

try_insert: A Deep Dive Into Danger

Here’s the core logic, annotated for maximum clarity and anxiety:

Step 1: Box the value

pub fn try_insert(&self, value: T) -> Result<usize, T> {

let boxed = Box::into_raw(Box::new(value));

We take your nice, innocent T and throw it onto the heap using Box::new().

Why? Because passing raw pointers between threads is like duct-taping fireworks to a Roomba, handing it a steak knife, and yelling “go fix the router!” — it’s technically motion, but it’s definitely not progress.

We use Box::into_raw() to strip the safety rails off and turn it into a naked pointer. At this point, the compiler is sweating, the borrow checker has fled the scene, and you are solely responsible for making sure this thing gets freed.

And guess what? If you mess up and don’t Box::from_raw() it later?

🥳 Congratulations, you just leaked memory. And possibly your sanity.

Step 2: Grab the head of the freelist

loop {

let old = self.freelist_head.load(Ordering::Acquire);

let (head, tag) = unpack(old); // Unpack tagged index to defend against ABA.

if head == N {

// Reclaim value from Box, avoid leak

let value = unsafe { *Box::from_raw(boxed) };

return Err(value);

}

...

}

We use Ordering::Acquire to read the freelist_head and make sure all reads after this can’t be reordered before it.

If the head is N, that means the list is empty — no available slots. Therefore we safely deallocate our boxed value, return Err(value) and cry.

Step 3: Peek at the next node and attempt to claim the slot.

...

let next_index = self.next[head].load(Ordering::Relaxed);

let new = pack(next_index, tag.wrapping_add(1)); // Pack tagged index to defend against ABA.

if self

.freelist_head

.compare_exchange(old, new, Ordering::AcqRel, Ordering::Relaxed)

.is_ok()

{

self.slots[head].store(boxed, Ordering::Release);

return Ok(head);

}

// Retry if compare_exchange failed

...

next_index tells us what the next freelist head should be if we succeed.

We don’t need strict ordering here; we’re just reading a number.

Now for the money shot: compare_exchange the crown jewel of atomic operations.

Using compare_exchange is like trying to rent a Craigslist apartment but only if:

- The place doesn’t reek of cigarettes.

- The bed doesn’t have more tenants than the lease.

- And the landlord isn’t already in a knife fight with a guy named Jeff who paid in expired Chuck E. Cheese tokens.

You show up with a suitcase, ready to move in — but only if everything is exactly as you saw in the blurry iPhone 4 photos.

If anything’s off? No deal. You walk, dignity mostly intact.

That’s compare_exchange: It’s hope with a contingency clause.

“Hey, I just read that the freelist_head is at index head. If no other thread has changed that value in the meantime, I want to replace it with next_index.”

NOTE: In this snippet we ignore the ABA problem to focus on Ordering semantics.

let head = self.freelist_head.load(Ordering::Acquire);

let next_index = self.next[head].load(Ordering::Relaxed);

if self

.freelist_head

.compare_exchange(head, next_index, Ordering::AcqRel, Ordering::Relaxed)

.is_ok()

{

self.slots[head].store(boxed, Ordering::Release);

return Ok(head);

}

By choosing Ordering::AcqRel for compare_exchange, you’re telling the CPU:

“I’m locking in both sides of this Craigslist deal — I won’t move out until I know the new place is mine, and I won’t move in unless the last guy’s weird anime posters are definitely gone all in one atomic handshake.”

When compare_exchange succeeds:

- The

freelist_headstill points tohead(no other thread modified it). - You win the race.

- The freelist head is updated to

next_index. - You store your boxed pointer in

self.slots[head]. - Return

Ok(head).

Initial state:

freelist_head = 1

next[1] = 3

Thread A: reads head = 1, next_index = 3

Thread A: compare_exchange(1 -> 3) ✅ succeeds

freelist_head = 3

When compare_exchange fails:

- Another thread beat you to it and already updated freelist_head.

- The value at freelist_head no longer matches what you saw.

- The atomic op fails.

- You loop and try again.

Initial state:

freelist_head = 1

Thread A: reads head = 1

Thread B: steals it and sets freelist_head = 3

Thread A: compare_exchange(1 -> 3) ❌ fails

Thread A: retries

What if you used Ordering::Relaxed instead of Ordering::AcqRel?

// BAD IDEA: Using Relaxed for everything

self.freelist_head.compare_exchange(head, next_index, Ordering::Relaxed, Ordering::Relaxed)

That’s like saying:

“I’ll move into the craigslist apartment as long as it looks roughly like the place I saw online. I don’t need to check if the previous tenant left. Or flushed. Or took the tarantula with them.”

Now imagine this scenario:

- You successfully

compare_exchangeand claim a slot. - Because of relaxed ordering, the CPU delays writing your pointer into

slots[head]until after another thread has already inserted and taken that slot again. - That thread now gets a non-null slot, reads your not-yet-written data (maybe still zeroed or garbage).

- You both think you own the slot.

Welcome to data races, memory corruption, and undefined behavior!

Thread A Thread B

-------- --------

load freelist_head = 1 load freelist_head = 1

load next[1] = 3 load next[1] = 3

compare_exchange succeeds compare_exchange fails (good!)

[RELAXED] store pointer... load slots[1] → still NULL! 😱

think it's empty — takes it again

Because Thread A didn’t guarantee the write to slots[1] happened before other threads could see the freelist head change, Thread B gets in too early.

That’s why compare_exchange needs to be Ordering::AcqRel:

Ordering::Acquireensures that if you see a value, all earlier writes leading to it are visible.Ordering::Releaseensures that your writes are visible before others see the updated value.

Step 4: Store the Pointer and Vanish Like a Legend

self.slots[head].store(boxed, Ordering::Release);

return Ok(head);

You’ve navigated the compare_exchange like a raccoon disarming a bear trap to get a single Cheeto — reckless, improbable, but undeniably effective.

You’ve claimed the slot!

Now it’s time to store your boxed pointer so other threads can see it — but only after everything else you did is safely locked in.

This is where Ordering::Release comes in. It’s like saying:

“I’ve moved into the Craigslist apartment. I wiped down the counters, cleaned the fridge, and hung up a motivational cat poster. And only now do I list the room as occupied.”

Without Ordering::Release, the CPU might reorder your writes, listing the room as available before you even moved your stuff in. That’s how roommates get duplicated, threads read garbage, and your program segfaults.

take: Reclaiming Memory, One Slot at a Time

Where try_insert is a high-stakes land grab, take is the cleanup crew: It clears out a slot, reclaims memory, and sticks the index back on the freelist — no locks, no nonsense.

NOTE: In this snippet we ignore the ABA problem to focus on Ordering semantics.

pub fn take(&self, index: usize) -> Option<T> {

if index >= N {

return None;

}

let ptr = self.slots[index].swap(ptr::null_mut(), Ordering::AcqRel);

if ptr.is_null() {

return None;

}

let value = unsafe { *Box::from_raw(ptr) };

loop {

let head = self.freelist_head.load(Ordering::Acquire);

self.next[index].store(head, Ordering::Relaxed);

if self

.freelist_head

.compare_exchange(head, index, Ordering::AcqRel, Ordering::Relaxed)

.is_ok()

{

break;

}

}

Some(value)

}

The swap guarantees exclusive access. The unsafe block reclaims ownership. The loop shoves the index back onto the freelist like a toaster full of bees — loud, reckless, and somehow still legal.

Using Ordering::Acquire on that load is like cracking open a suspicious Craigslist apartment door only after checking the peephole, listening for weird sounds, and texting your friend your location, just in case.

You’re saying:

“I’ll open this door — but I need to know everything behind it is exactly where it’s supposed to be before I take a single step inside.”

In CPU-land, this means:

All the memory writes that happened before the other thread released the freelist head are now guaranteed to be visible to you.

So if another thread added an index to the freelist, and used Ordering::Release to do it, your Ordering::Acquire makes sure you see the fully updated next pointer — not some haunted half-write from an alternate timeline where the thread gave up halfway through and started a podcast.

In short:

Ordering::Acquire = “I’m not touching anything until I know it’s safe.”

Ordering::Release = “I’m done. It’s safe. You may now enter.”

Now for the compare_exchange on the freelist_head we choose Ordering::AcqRel

Back to the Craigslist analogy:

Ordering::AcqRel is like handing your keys to the next tenant — but only after you:

- Removed your three-year collection of monster energy drink cans.

- Disarmed the cursed Roomba in the hallway.

- Wrote a sticky note that says “back to normal, mostly.”

Only then do you update the listing to say: “Spot’s open. Come on in.”

If you didn’t use Ordering::AcqRel? It’s like screaming “ALL YOURS!” out the window while you’re halfway down the fire escape.

With Ordering::AcqRel

Thread A: load freelist_head = 1

load next[1] = 2

compare_exchange(1 → 2) ✅ succeeds (AcqRel)

store ptr to slots[1] (Release)

Thread B: load freelist_head = 2 (sees updated head)

load next[2] = ...

compare_exchange(2 → ...) ✅ safe, slot[1] is fully written

With Ordering::Relaxed

Thread A: load freelist_head = 1

load next[1] = 2

compare_exchange(1 → 2) ✅ succeeds (Relaxed)

store ptr to slots[1] ← DELAYED (write not visible yet)

Thread B: load freelist_head = 2 (sees update too soon!)

loads slots[1] → still NULL!

thinks it's free — tries to insert again ❌

💀 DOUBLE ALLOC / USE AFTER FREE 💀

Conclusion: You’re in Too Deep, and It’s Beautiful

You’ve stared into the abyss of lock-free programming, and instead of blinking, you atomically swapped your sanity for a pointer and marched forward.

You’ve built your own thread-safe array out of nothing but raw pointers, atomics, and vibes. You didn’t just avoid the Mutex<T> — you left it crying in a corner while your freelist did donuts in the parking lot.

You now understand:

- Why

compare_exchangeis the trust fall of systems programming. - How memory ordering is a terrifying choose-your-own-adventure baked into the CPU.

- And why

Ordering::Relaxedshould come not only with a warning label, but a tetanus shot.

Well, you could:

- Build a high-performance concurrent queue.

- Write a lock-free stack and impress exactly 1.5 people.

- Or start a band called ”

AtomicPtr<T>and the Race Conditions.”

But most importantly, you’ve learned that lock-free doesn’t mean worry-free. It’s a knife fight in a phone booth with physics and compilers — and you’re the one holding the spoon.

If you enjoyed this article or want to see the power of lock free data structures done right, please check out our product.

👀 SPECIAL OFFER: In honor of the raccoon: The next 100 sign ups with yeet installs will win a limited edition T-Shirt.

Appendix

Benchmarking Code

use std::sync::{Arc, Mutex};

use std::thread;

use std::time::{Duration, Instant};

mod lockfree;

use lockfree::LockFreeArray;

const ARRAY_SIZE: usize = 100;

const PRODUCERS: usize = 6;

const CONSUMERS: usize = 2;

const OPS_PER_PRODUCER: usize = 100_000;

const TRIALS: usize = 10;

fn run_lockfree_trial() -> Duration {

let array = Arc::new(Box::new(LockFreeArray::<usize, ARRAY_SIZE>::new()));

let mut handles = Vec::new();

let start = Instant::now();

for _ in 0..PRODUCERS {

let array = Arc::clone(&array);

handles.push(thread::spawn(move || {

for i in 0..OPS_PER_PRODUCER {

while array.try_insert(i).is_err() {

std::hint::spin_loop();

}

}

}));

}

for _ in 0..CONSUMERS {

let array = Arc::clone(&array);

handles.push(thread::spawn(move || loop {

for i in 0..ARRAY_SIZE {

let _ = array.take(i);

}

}));

}

for handle in handles.into_iter().take(PRODUCERS) {

handle.join().unwrap();

}

Duration::from_secs_f64(start.elapsed().as_secs_f64())

}

fn run_mutex_trial() -> Duration {

let vec = Arc::new(Mutex::new(vec![None; ARRAY_SIZE]));

let mut handles = Vec::new();

let start = Instant::now();

for _ in 0..PRODUCERS {

let vec = Arc::clone(&vec);

handles.push(thread::spawn(move || {

for i in 0..OPS_PER_PRODUCER {

loop {

let mut guard = vec.lock().unwrap();

if let Some(pos) = guard.iter_mut().position(|v| v.is_none()) {

guard[pos] = Some(i);

break;

}

}

}

}));

}

for _ in 0..CONSUMERS {

let vec = Arc::clone(&vec);

handles.push(thread::spawn(move || loop {

let mut guard = vec.lock().unwrap();

for val in guard.iter_mut() {

let _ = val.take();

}

}));

}

for handle in handles.into_iter().take(PRODUCERS) {

handle.join().unwrap();

}

Duration::from_secs_f64(start.elapsed().as_secs_f64())

}

fn summarize_trials(lockfree: &[Duration], mutex: &[Duration]) {

let lf_times: Vec<f64> = lockfree.iter().map(|d| d.as_secs_f64() * 1000.0).collect();

let mx_times: Vec<f64> = mutex.iter().map(|d| d.as_secs_f64() * 1000.0).collect();

let percent_diffs: Vec<f64> = mx_times

.iter()

.zip(&lf_times)

.map(|(m, l)| ((m - l) / m) * 100.0)

.collect();

println!(

"{:<10} {:>20} {:>25} {:>15}",

"Trial", "LockFreeArray (ms)", "Mutex<Vec<Option<T>>> (ms)", "Diff (%)"

);

for (i, ((lf, mx), diff)) in lf_times

.iter()

.zip(&mx_times)

.zip(&percent_diffs)

.enumerate()

{

println!("{:<10} {:>20.3} {:>25.3} {:>14.2}%", i + 1, lf, mx, diff);

}

let mean_lf = mean(&lf_times);

let mean_mx = mean(&mx_times);

let mean_diff = mean(&percent_diffs);

let std_lf = std_dev(&lf_times, mean_lf);

let std_mx = std_dev(&mx_times, mean_mx);

let std_diff = std_dev(&percent_diffs, mean_diff);

println!(

"{:<10} {:>20.3} {:>25.3} {:>14.2}%",

"Mean", mean_lf, mean_mx, mean_diff

);

println!(

"{:<10} {:>20.3} {:>25.3} {:>14.2}%",

"Std Dev", std_lf, std_mx, std_diff

);

println!();

if mean_lf < mean_mx {

println!(

"🏁 Winner: LockFreeArray (faster by {:.2}% on average)",

mean_diff

);

} else {

println!(

"🏁 Winner: Mutex<Vec<Option<T>>> (faster by {:.2}% on average)",

-mean_diff

);

}

}

fn mean(data: &[f64]) -> f64 {

data.iter().sum::<f64>() / data.len() as f64

}

fn std_dev(data: &[f64], mean: f64) -> f64 {

let variance = data.iter().map(|v| (*v - mean).powi(2)).sum::<f64>() / data.len() as f64;

variance.sqrt()

}

fn main() {

println!("Running {TRIALS} trials of producer-consumer workloads");

println!("Producers: {PRODUCERS}, Consumers: {CONSUMERS}, Array Size: {ARRAY_SIZE}");

println!("------------------------------------------------------");

let mut lockfree_times = Vec::new();

let mut mutex_times = Vec::new();

for _ in 0..TRIALS {

lockfree_times.push(run_lockfree_trial());

}

for _ in 0..TRIALS {

mutex_times.push(run_mutex_trial());

}

summarize_trials(&lockfree_times, &mutex_times);

}